|

|||||||||||

|

Why Chess Programs Find Good Moves But Barely Understand Chess After All by Thomas Hall Thomas Hall (1957- ) studied computer science with a special interest in programming languages. He has been interested in chess since his youth. As expert of the Smith-Morra Gambit he created an as yet unpublished computer opening book for this chess opening. In recent years he has been particularly concerned with Artificial Intelligence (AI), starting a project to develop alternative methods of chess programming. This article is also available in PDF format. Contents:

Every user however, who lets a strong chess engine play against itself in analysis mode, will notice that the engine's assumptions don't often come true, especially when pursuing an opening variant from the start. Moves estimated as quite good initially may turn out to be bad later. Why is this? The program has fallen victim to the so-called "horizon effect" which affects all engines to the present day. To explain this it is worth examining the search methods of chess programs more closely. 2.0 The Minimax-Method The clause from game-theory that in such two-person games the sum of the gains (i.e. advantages) and losses (i.e. disadvantages) is always zero was already published in 1928 by John v. Neumann [1]. So a good move means that the opponent has got only worse moves, all of them in all variants leading to favorable positions which are therefore unfavorable for the opponent. Now we "only" have to define a favorable position in chess, and there is already a method to simply try out the moves more or less exhaustively on an internal board in the following way:

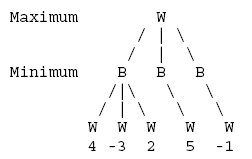

As you recognize, White has 3 choices to move in the start position. After White's first move Black has 3 choices, otherwise Black only has one choice. Typical chess positions naturally have many more, approx. 35 choices to move on average, but for now it's only about principle here. So this diagram presents a game tree that starts with the root node - that is the start position - and ends in five possible leaf nodes - the end positions. For each of the possible leaf nodes, the chess engine's so-called evaluation function calculates a number indicating the merit of the respective position, so e.g. positive values for positions in favor of White and negative values for those in favor of Black. According to the Minimax principle, White, when striving for the "best" move, must assume that Black will also select the "best" move, i.e. Black minimizes its positional values while White maximizes its own. In the above diagram Black would pick the move leading to the position with value -3 (i.e. the minimum) in the first subtree, and would pick the moves with the values 5 and -1 in the middle and right subtree, respectively. However White selects the maximum of these values, so from -3, 5, and -1 the maximum would be 5 and the decision would be made in favor of the middle subtree. A chess program would therefore assign a value +5 to the main variant. In chess the number of leaf nodes in the game tree quickly grows very large, and explodes exponentially. With n plies this amount would result in n35 leaf nodes. To make the process more manageable in practice, it was crucial to discover a possibility to cut off whole subtrees in order to be able to evaluate far fewer leaf nodes. Prerequisite for this possible pruning is move sorting by likely quality (e.g. first exchange moves then moves attacking opponent's pieces etc.) The procedure that really does the task is called Alpha-beta pruning. It is described more precisely in a Wikipedia article, among other references. For this discussion it is important that this method doesn't alter the essence of the Minimax algorithm. The quality of today's chess programs is mainly determined by the type of evaluation functions used, because it is only here that the program makes decisions about the assessment of good or bad positions. Early implementations only accounted for a few criteria in this context, particularly counting the material and recognition of mate and stalemate. But it was discovered very quickly that this was nowhere near adequate. Today evaluation function factors also consider factors like king's security, pawn structures and space advantage, to name just a few. 3.0 The Horizon Effect As an illustration of the horizon effect consider the following position taken from the documentation of Salomon Chess [2]:

Among the variants detected is White's capture of the knight and White's capture of the pawn. According to the Minimax algorithm, at this moment taking the knight is better for White because the engine stops any further computation and doesn't notice the consequences. After 4 plies the program is left in inky blackness; therefore this mistake occurred enabling White to ultimately reach a draw. In principle, a chess program behaves like a blind man who is feeling his way along the curb with his cane and only notices afterwards that something crucial has happened, e.g. the curb ended and he arrived at a crossing. When starting from the opening move, it is nearly impossible to reach a good middle game position without additional information due to the tremendous number of possible moves. Therefore all successful chess programs use so-called opening books, where, according to current opening theory, playable variations for both sides are stored. For the same reason, endgame tables are used. In these tables, all moves for all positions with (very) few men on the board are stored as results of preceding month-long computations. In these tables an engine can directly look up whether a certain move wins or not. If the move wins, it can also look up how many moves are needed to reach checkmate. A chess program with no such table to consult for a given move will inevitably examine a plethora of unreasonable move combinations. Though an apparent sheer waste of time, this does imply that tactical maneuvers within the game tree's scope (i.e. on the near side of the horizon) are not overlooked. This fact decisively contributes to the considerable success of chess programs over human opponents. During this quite aimless search the same positions can occur more than once. In order to avoid evaluating these positions twice, so called hash-tables are employed internally in order to store intermediate results of previous evaluations. The user can take advantage of these tables when analyzing games, more of this later. 4.0 The Evaluation Function and Its

Limits The pure material values of the men on the board can be accumulated easily, but then positive values for positional advantages like open rook-files and knight outposts or points of negative values for backward pawns and unprotected kings must be assigned. With the issue of king security alone a clear dilemma emerges: particularly in the middle game the king should be preferably protected behind a line of pawns, but this piece becomes an active piece in the endgame and then it is disadvantageous for the king to remain at the edge of the board. This raises the question of where exactly the endgame begins, and if this can be defined by the remaining material alone. There are more matters of conscience for the programmers. For in order to eventually get the number of the position value, dynamic elements like piece mobility have to be brought in line with static elements like material values. 4.1 Sacrifices

Modern engines in particular believe that Black is a bit better off here and they typically want to play 20.Qe2?. White however has a clear winning line after 20.Rxg7!, for example: 20.Rxg7 Rxg7 21.Qxh6 Rg8 22.Qh7 Ne7 23.Nf3 Kd7 24.Bg5 Re8 25.g7 Qd8 26.Bg6 Rg8 27.Rc1 b6 28.b4 axb4 29.axb4 Na6 30.Bd3 Nc7 31.Bxe7 Kxe7 32.Rxc7+ Qxc7 33.Qxg8 Qc1+ 34.Kf2 Qf4 35.Qh7 Ra2+ 36.Be2 Qf7 37.g8=Q 1-0 Granted, White's advantage after 20.Rxg7 becomes apparent after only five or six moves, but modern engines reach this search depth very fast. So what happens here? Because positional factors control the evaluation function, sacrificial moves are inserted at the end of the list of moves to be examined. The search proceeds incrementally, increasing the search depth slowly, that is ply after ply, in order to gain hints for the new sorting sequence in the current search depth from the preceding examination. Since the value of the sacrifice manifests itself after a relatively long period of time it's quite possible that the opportunity to play Rxg7 is missed by a cut-off operation of Alpha-beta pruning. So gearing the evaluation function towards predominantly positional factors would have precluded the examination of a move that makes no sense at first glance. Interestingly enough, several older programs such as Fritz 9 and even Fritz 10 still find the rook sacrifice, whereas Fritz 11 as well as Rybka do not locate this move. 4.2 Endgames

Most engines however, promptly take the rook on b3 ending up in a draw after 2.Kxc7. The short-term material advantage prevails over the long-term winning route which can be only detected by certain computations in the evaluation function. For the Alpha-beta procedure must have noticed success or failure in the first place and this can take a long time with the number and length of variants to be excluded. By the way, Zappa Mexico II seems to invest more effort here than others because this chess program found the key move 1...Rc4 rather quickly but needed a longer time in order to find the victorious maneuver 1...Rc4 2.Re2 Kf7 3.Ra2 Rd4+ 4.Kc5 Rd1 5.Kc4 Be1 6.f4 Bf2. 4.3 Fortresses Here is an example of a fortress:

5.0 Conclusions In spite of this computing power, very long variants absolutely cannot be tackled, although in the endgame extremely long lines to checkmate can be found, as can be gleaned from the endgame tables. An engine which wants to avoid trying out move variants in a hit-or-miss fashion, needs far more chess knowledge than that utilized in today's programs. Current programs cannot explain why a certain move is either good or bad because causal chains are not followed, thus the move and countermove of a variant is only the indirect result of a position value return to the game tree's root. Yet more is required in order to master chess. It's actually amazing that these inadequate methods generally still compute useable moves. In closing we return to the hash-tables mentioned above. Because these are not automatically deleted when a user steps through an already computed variant using the graphical frontend in analysis mode, the horizon effect can actually be mitigated in this way: if the program detects hash-table values of recurring positions that were already computed down the line, it can use them to find other, hopefully better, moves. But overall that's small comfort. When it comes to the truth in chess, one can currently only rely on the endgame table bases. Ultimate certainty concerning the quality of chess moves can, in general, not be gained by the Alpha-beta algorithm. [1] Von Neumann, John: Zur Theorie der Gesellschaftsspiele

Math. Annalen. 100 (1928) 295-320 See also:

|

The

Advertise to Single insert:

|

||||||||||

|

|||||||||||